Methodology

State of the art software to predict football outcomes and profitable distinct approaches, based on machine learning techniques.

1. Machine Learning

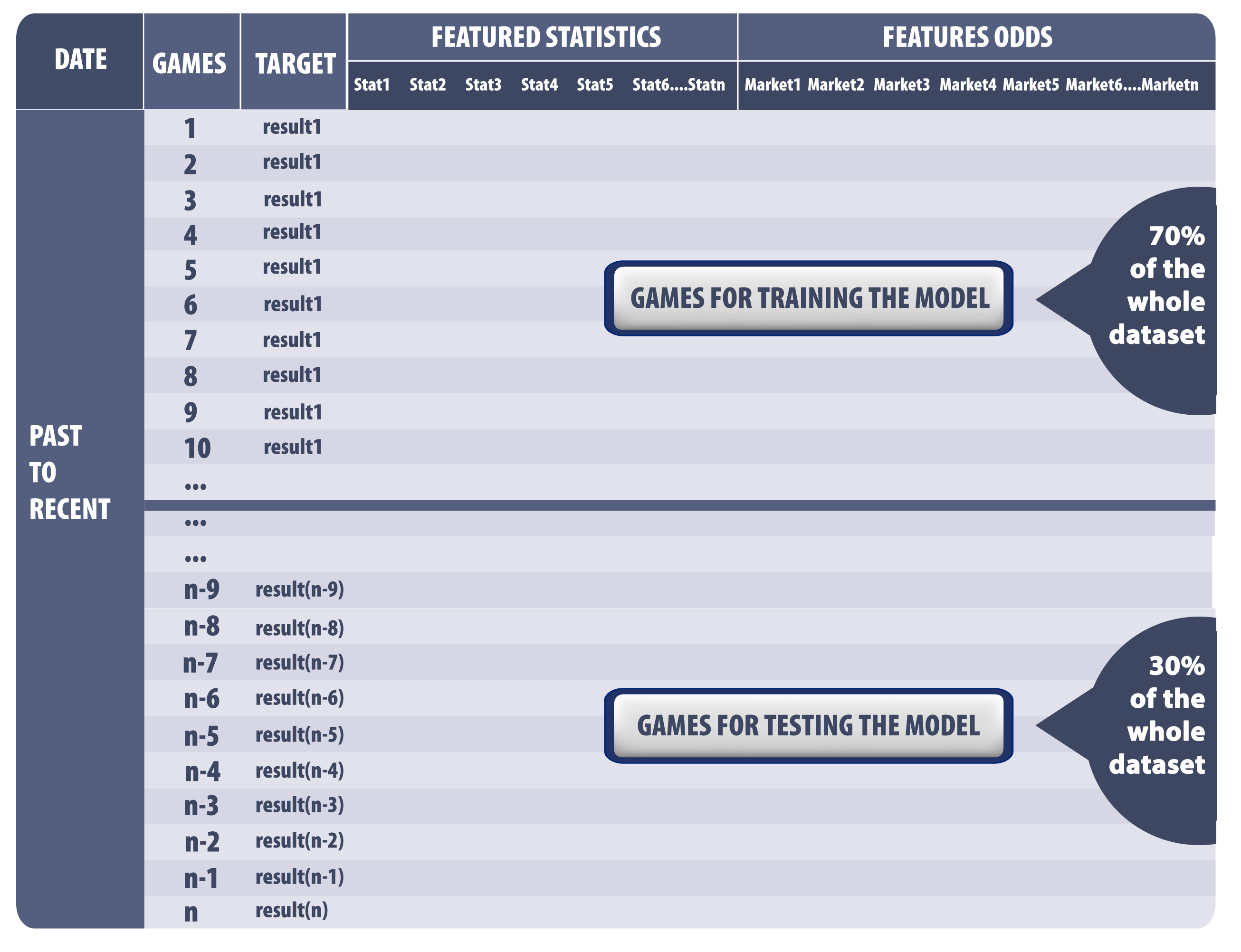

Starting off, the prediction model used as part of the system built by users, makes use of a Random Forest classifier model (more algorithms potentially to be added in the future). In order to train and test such a model, a matrix of data (constructed from the relevant selections of the user) is used, that closely resembles the one presented in photo 1. The table include the dataset of all past games selected by the users in a chronological order. The target column is the game outcome we want to predict. Columns of features statistics includes the stastistic values for each of the team concerning previous games in a number selected by the user. Columns of features odds are the book odds prices of previous games concerning the market selected by the user.

After a train/test split is created in a portion of 70/30, the model is trained using games of the train set, while the test set is kept for testing or simulation purposes. For testing, while initial thoughts could be to just use the previously trained model to make predictions and judge them on known results, futbolbrain uses a better approach that is more closely resembles the day-to-day operation of the model. As such, we came up with a “progressive” training plan, in which the test set is split into numerous blocks (representing weeks) for which an iterative process takes place where at each step one block is added to the train set, the model is re-trained and the next block is used for prediction. The process repeats until no blocks are left to test, abiding to the temporal requirements of the problem at hand. The “progressive” training process is illustrated in photo 2.

2. Unbalanced Data

Another strong point of our methodology, concerns unbalanced data points. Specifically, some markets outcome happen less often than others (eg Under 0.5 goals happends less than 10% in a season league etc), creating results where specific outcomes are only found in a small portion of the samples and thus do not make for representative examples of the problem. The former can lead to poor modelling and severely deteriorate the performance of our predictor model. As such, re-sampling techniques are used. Specifically, when data sets are detected to be unbalanced – further than a tolerated degree- SMOTE functions are applied to re-sample the data by creating synthetic samples of the most rare-occurring examples. This way, better results can be achieved, by better modelling “hard” cases where simple not enough data are available.

3. Fine-tuning

As machine learning approaches seek to better model problems given only a limited amount of samples and features, hyper-parameter settings depending the algorithm being used is important. To that end, the latest function of the platform allows the use of fine-tuning techniques, which aim to find the best-performing hyper-parameter settings combinations. A work in progress, early results show promising improvements over the default behaviour with more accurate predictions that in turn lead to higher profitability for the systems that employ them.